Weeks 4&5 (Ch06)

Sensory Components of Motor Control

2025-10-05

Presentation Viewing Recommendations

- This presentation is best viewed in a web browser on a computer or tablet. I do not recommend using mobile phones.

- Use keyboard shortcuts for navigation (e.g., arrow keys, spacebar).

- Use keyboard key “A” to pause/play audio on the current slide.

- The audio will automatically pause when you navigate to a different slide.

- Use keyboard key “S” for different viewing modes.

- If you have issues with audio playback or captions, try a different web browser (e.g., Chrome, Firefox, Safari).

The PDF version of this presentation can be downloaded from the Download link at the top.

Objectives

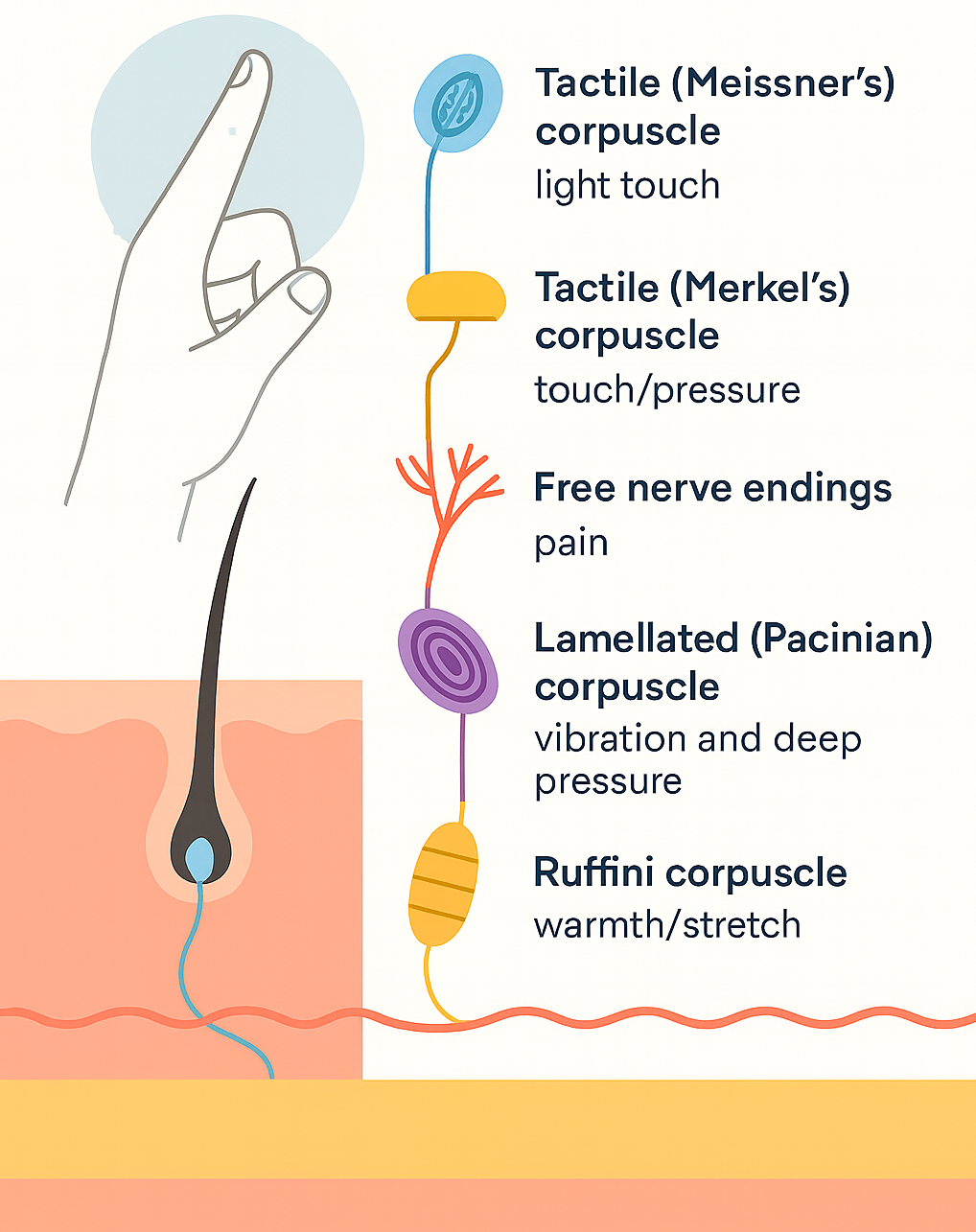

- Identify skin receptors that provide tactile information to the CNS

- Explain how tactile feedback affects accuracy, consistency, timing, and force

- Identify proprioceptors and what they signal to the CNS

- Describe classic methods to study proprioception (e.g., deafferentation, tendon vibration)

- Summarize key eye anatomy and visual pathways for motor control

- Explain methods to study vision in action (eye tracking, occlusion)

- Discuss binocular vs. monocular, central vs. peripheral vision

- Describe perception–action coupling, online visual corrections, and tau

Objectives 1-4

- Identify skin receptors that provide tactile information to the CNS

- Explain how tactile feedback affects accuracy, consistency, timing, and force

- Identify proprioceptors and what they signal to the CNS

- Describe classic methods to study proprioception (e.g., deafferentation, tendon vibration)

- You can study for the objectives using our StudyApp.

0.1 🌐 Introduction

- Sensory information is fundamental in all major theories of motor control & learning

- Roles across the action timeline:

    - Pre-movement → specify parameters of action

    - Online (during movement) → provide feedback for adjustments

    - Post-movement → evaluate goal achievement

- Focus in this section:

    - ✋ Touch (tactile system)

    - 🌀 Proprioception

    - 👀 Vision (covered in objectives 5–8)

0.2 ✋ Touch & Motor Control: Overview

- Touch provides essential feedback for achieving action goals in daily skills

- Skin receptors:

    - Located in the dermis

    - Densest in the fingertips → support precision

    - Signal pressure, stretch, and vibration (mechanoreceptors), as well as temperature (thermoreceptors) and pain (nociceptors)

- Critical for:

    - Object manipulation (e.g., grasping, typing, playing piano)

    - Interactions with people/environment (e.g., walking, sports)

0.3 Neural Basis of Touch (at a glance)

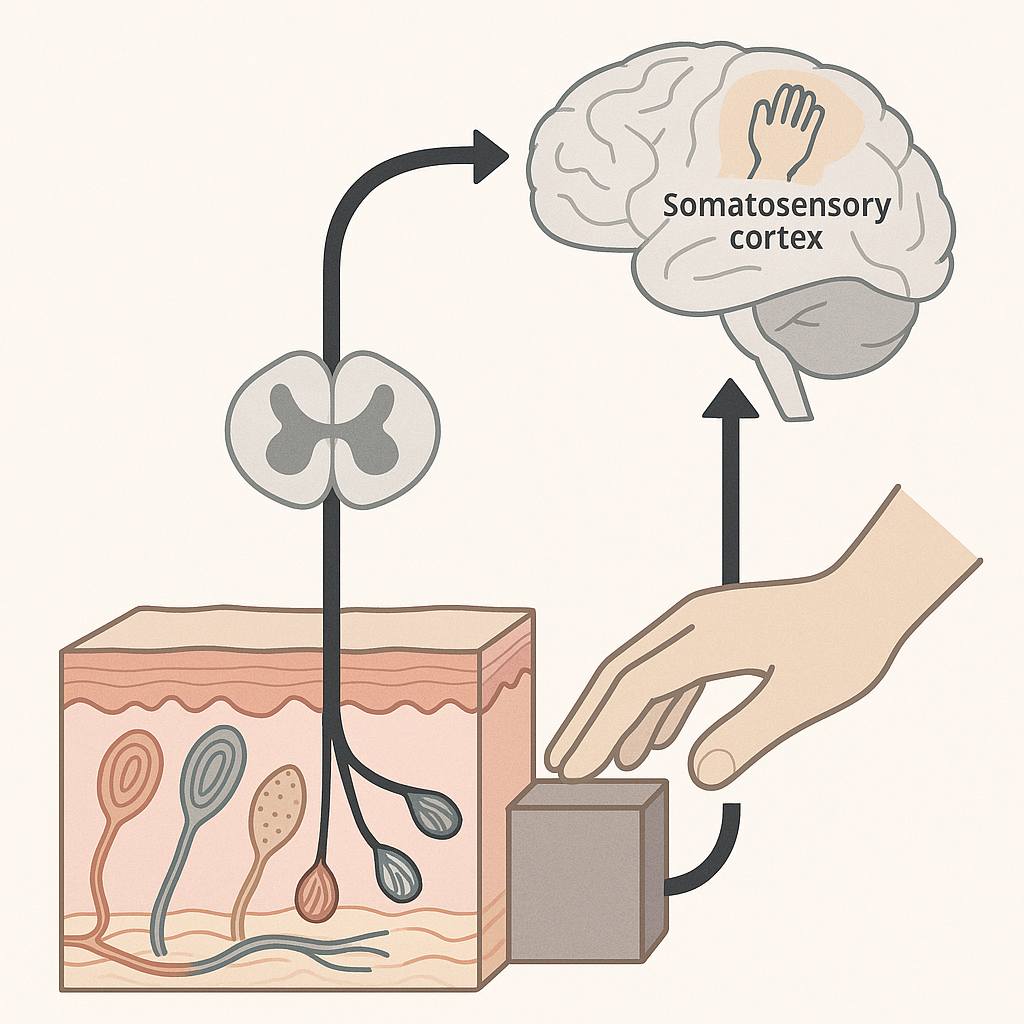

- Mechanoreceptors in the skin transduce deformation into neural signals

- Tactile information travels via ascending somatosensory pathways

- Signals reach the somatosensory cortex, integrating with motor areas

- This feedback enables action planning, adjustment, and control

0.4 🖐️ Roles of Tactile Information in Motor Control

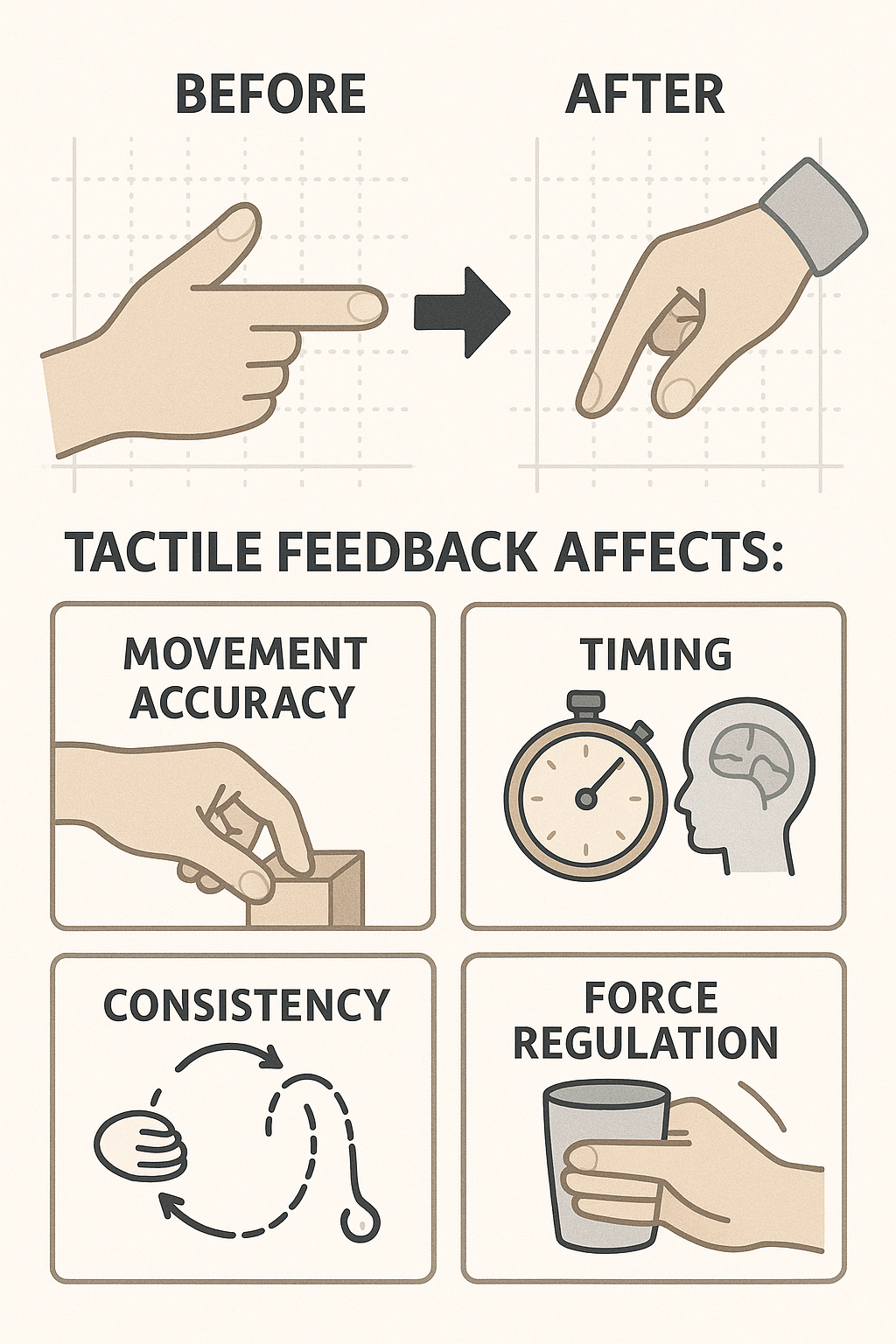

- Experimental approach: Compare motor performance before and after anesthetizing fingertips

- Tactile (cutaneous) feedback affects:

    - ✅ Movement accuracy: especially in pointing, grasping, and fine motor skills

    - 🔁 Consistency: reduces variability in repeated movements

    - ⏱️ Timing: crucial for rhythmic actions and phase transitions (e.g., tapping, circle drawing)

    - 🧠 Force regulation: helps scale grip force and adjust mid-movement (e.g., lifting a cup)

Tactile input supports precision, rhythm, and adaptability in everyday and skilled movements.

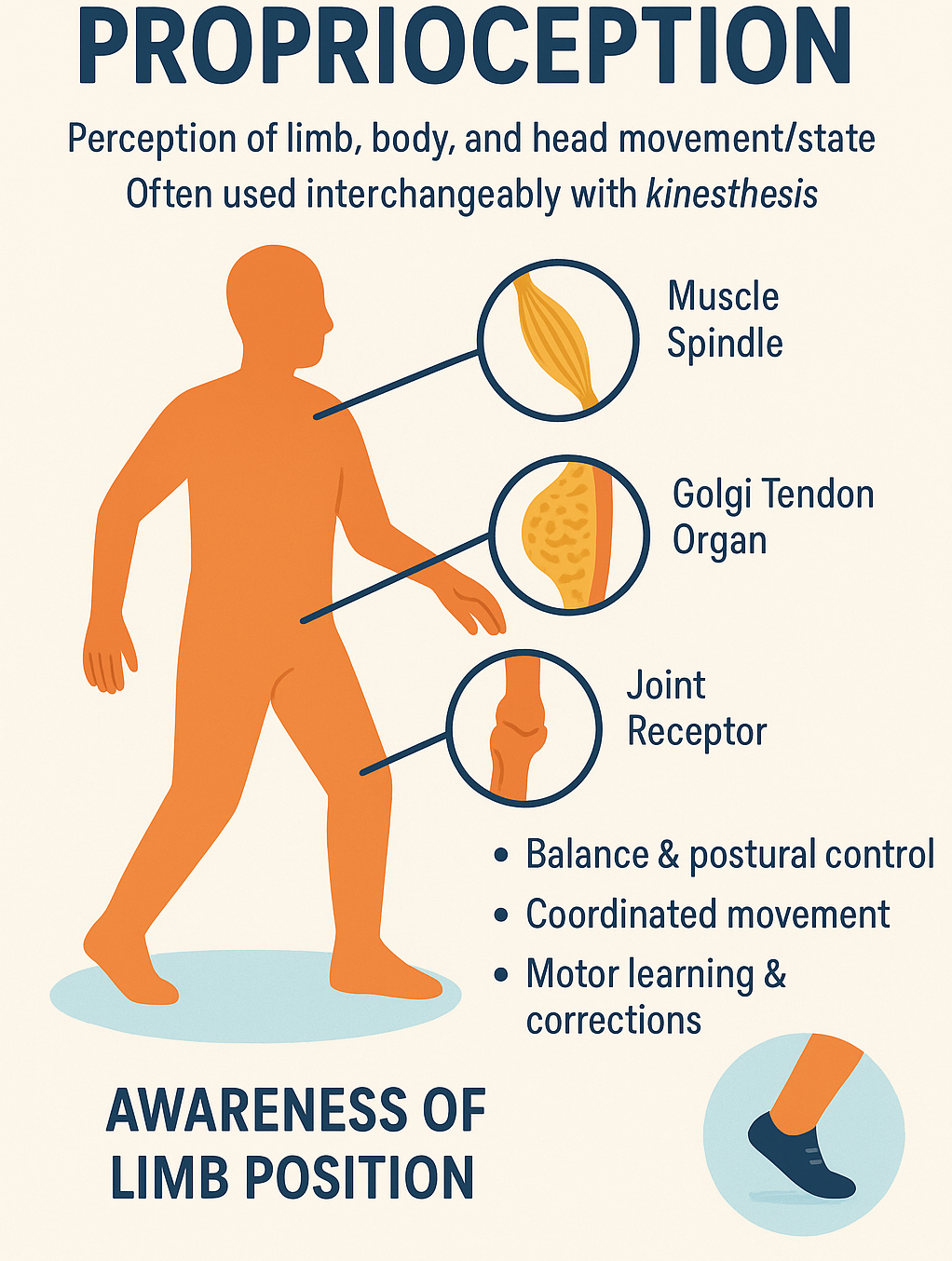

0.5 Proprioception: Definition

Proprioception is the body’s ability to sense the position, movement, and force of limbs, trunk, and head — even without visual input.

- Sometimes used interchangeably with kinesthesis

- Involves feedback from muscle spindles, Golgi tendon organs, and joint receptors

- Critical for:

    - Balance and postural control

    - Coordinated movement

    - Motor learning and corrections

📌 Essential for executing movements like walking, reaching, and grasping — even in the dark!

0.6 🧩 Neural Basis of Proprioception

- Proprioceptors are sensory neurons located in:

    - Muscles, tendons, ligaments, and joints

- They provide continuous feedback about limb position, movement, and force

- Three primary classes:

    - Muscle spindles → detect changes in muscle length & velocity

    - Golgi tendon organs (GTOs) → detect muscle tension/force

    - Joint receptors → detect joint angle & movement at extremes of motion

- Proprioceptive signals travel via the dorsal column-medial lemniscal pathway to the somatosensory cortex

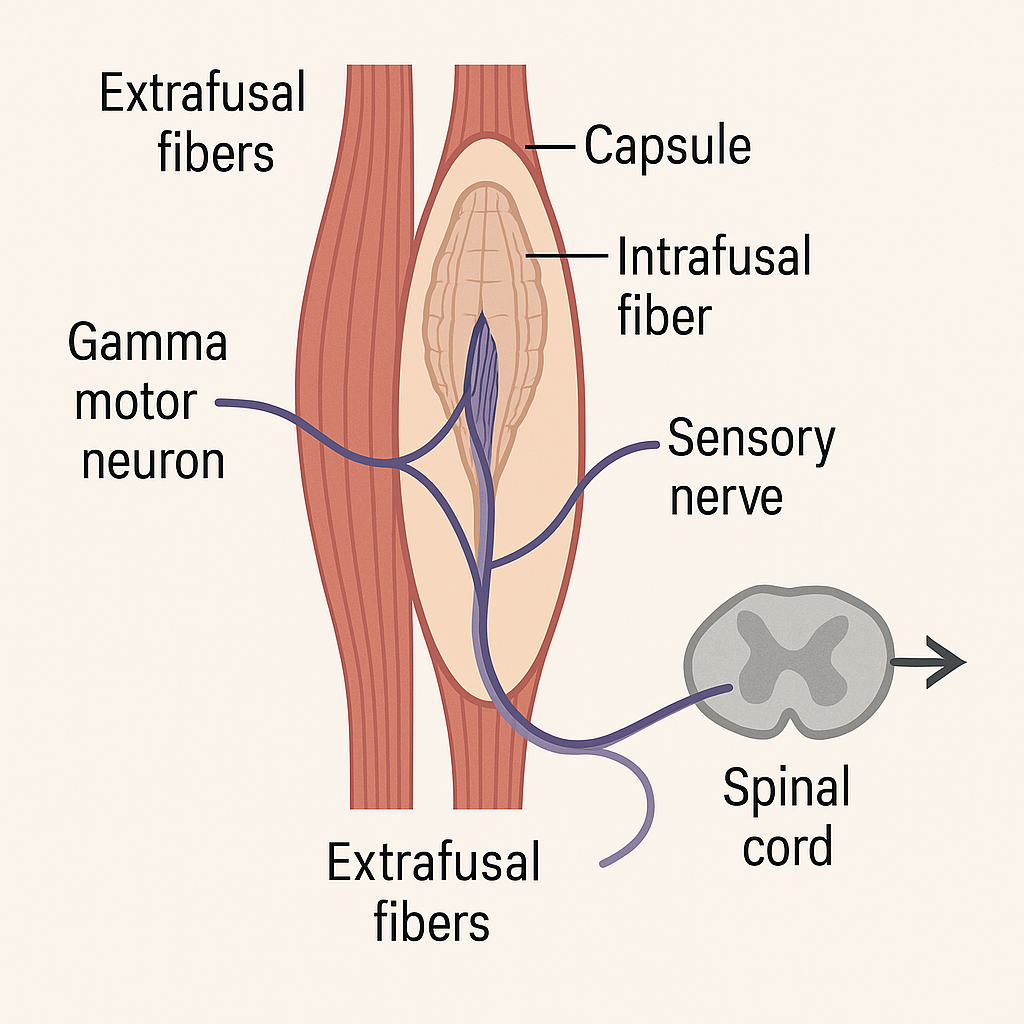

0.7 💪 Muscle Spindles (1)

- Encapsulated intrafusal fibers located within most skeletal muscles

- Arranged in parallel with force-generating extrafusal fibers

- Sensory endings (type Ia afferents) wrap around the central region → detect muscle length & velocity

- Innervated by gamma motor neurons (fusimotor system) → maintain spindle sensitivity during contraction

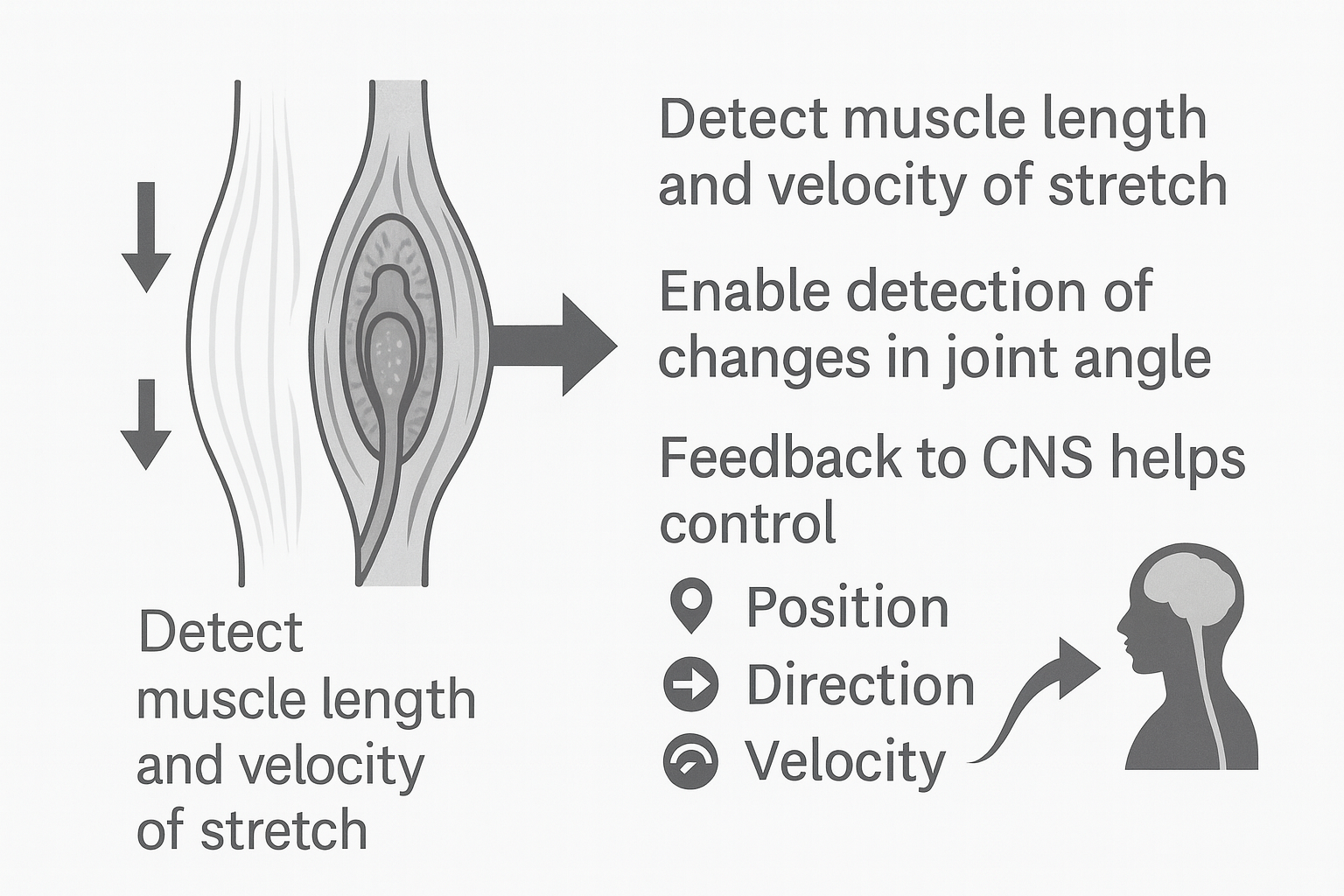

0.8 🌀 Muscle Spindles (2)

- Detect muscle length and velocity of stretch

- Provide sensory basis for joint angle changes

- Continuous feedback to CNS supports control of:

    - Position (limb placement in space)

    - Direction (movement trajectory)

    - Velocity (speed of movement)

- Critical for both movement correction and planning

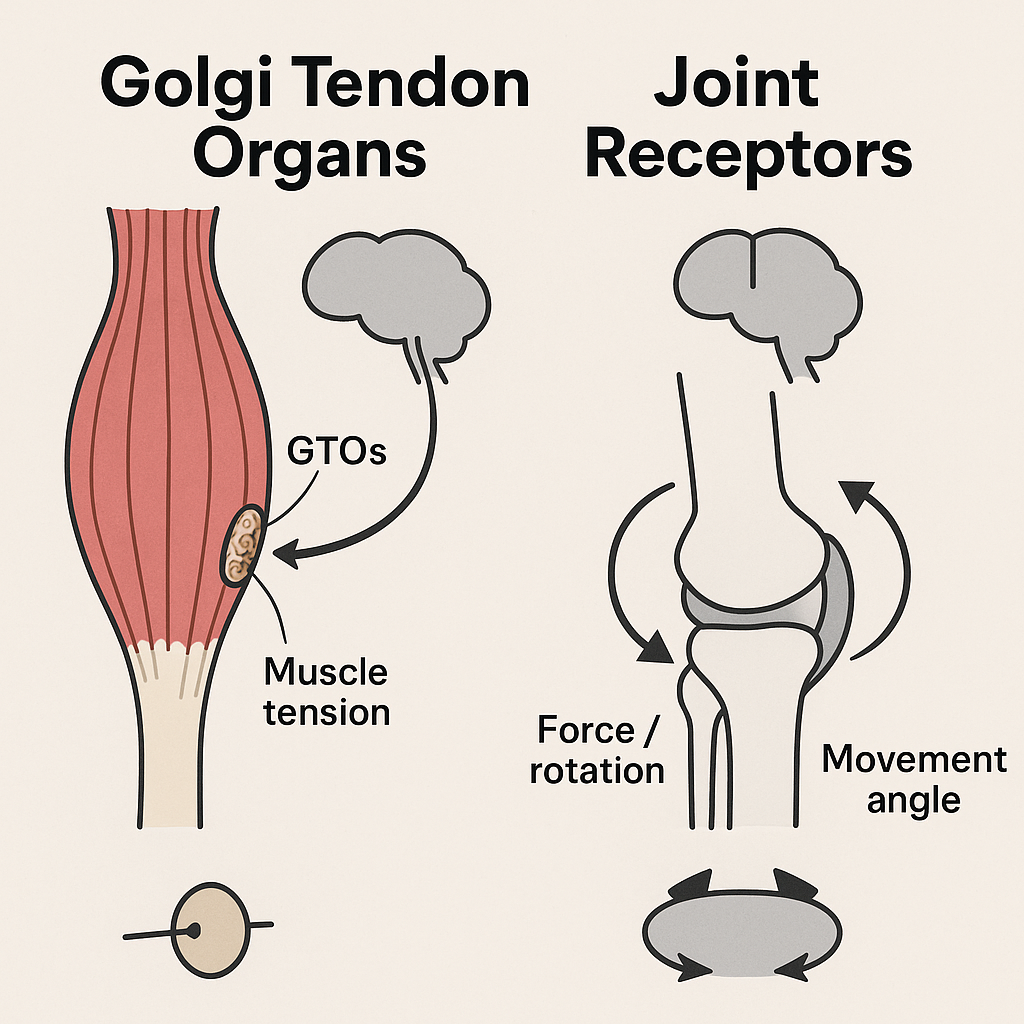

0.9 ⚖️ Golgi Tendon Organs & Joint Receptors

- Golgi Tendon Organs (GTOs):

    - Located near tendon insertions in skeletal muscle

    - Detect muscle tension / force (not length)

    - Provide inhibitory feedback to prevent excessive force

- Joint Receptors:

    - Found in joint capsules and ligaments

    - Detect force, rotation, and movement angle

    - Especially sensitive at end ranges of motion

    - Include Ruffini endings, Pacinian corpuscles, and Golgi-like receptors

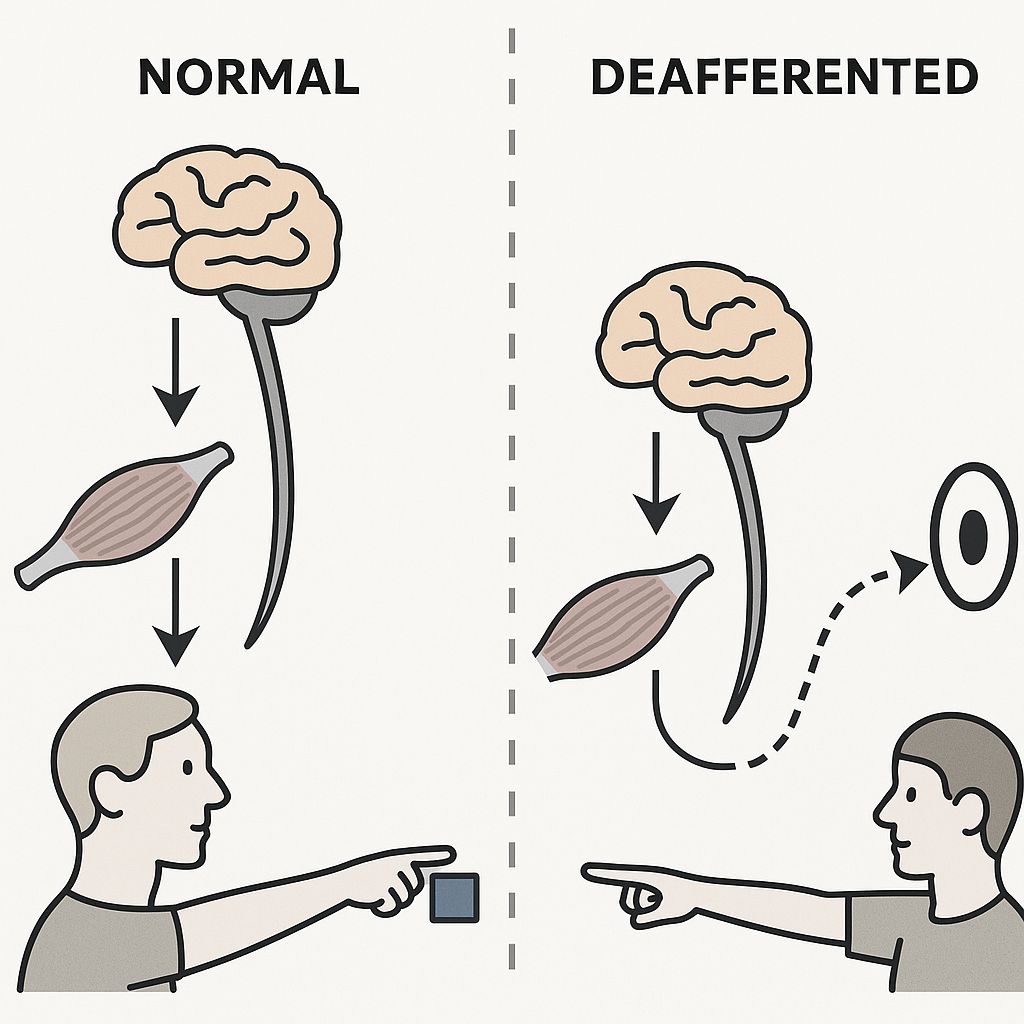

0.10 🧪 Investigating Proprioception: Deafferentation

- Surgical deafferentation

    - Afferent pathways severed or removed (animal studies, rare clinical cases)

    - Used to study how loss of proprioceptive input alters movement control

- Sensory neuropathy (peripheral neuropathy)

    - Loss of large myelinated afferents → profound proprioceptive deficits

    - Pain & temperature sensation often preserved

    - Movements show spatial errors, poor smoothness, and lack of coordination

- Research example: Blouin et al. (1993), cited in Magill & Anderson (2017)

    - Compared deafferented patient vs. healthy controls in a pointing task

    - With vision: performance nearly normal

    - Without vision: patient consistently undershot targets

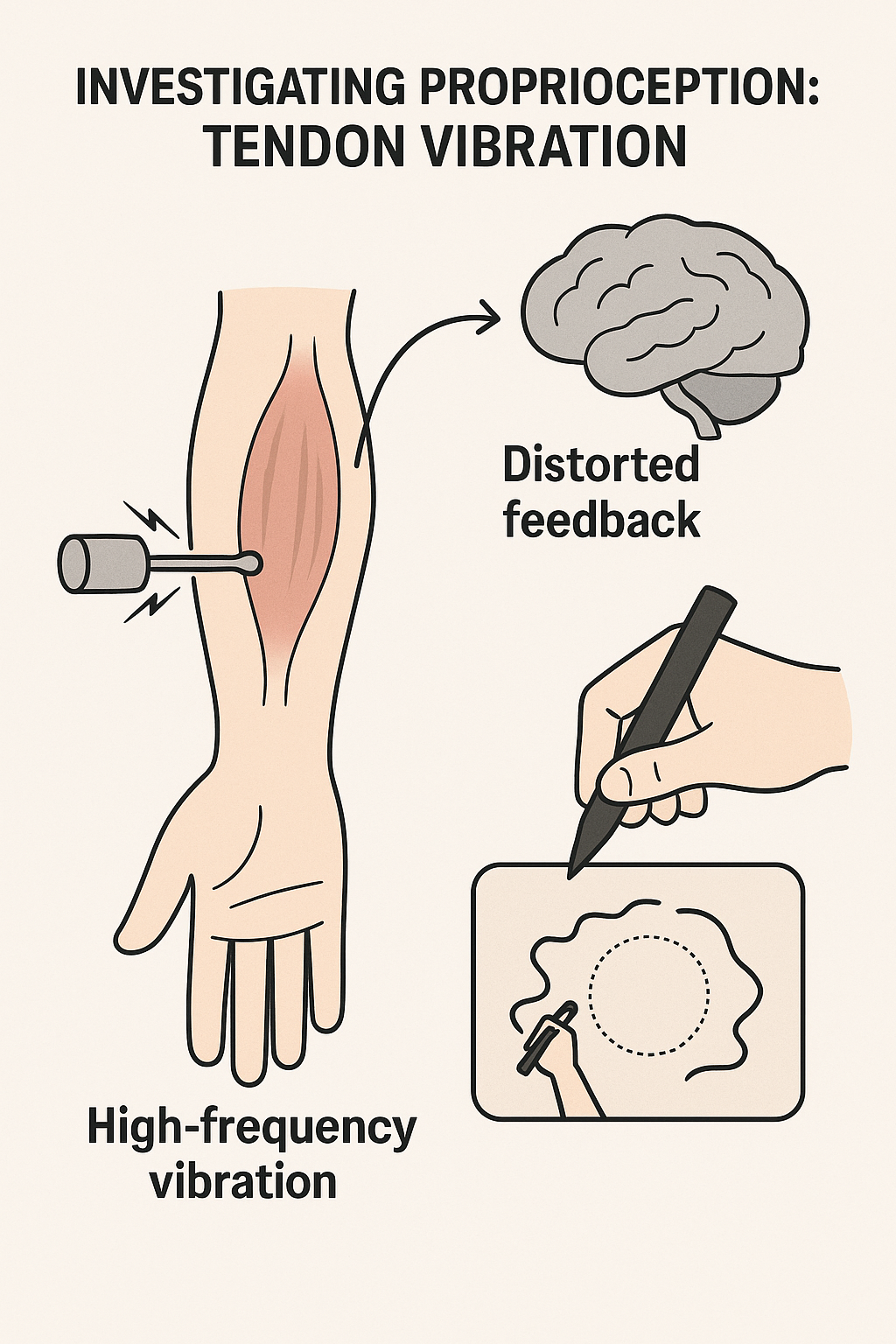

0.11 🎵 Investigating Proprioception: Tendon Vibration

Method: Apply high-frequency vibration to the tendon of an agonist muscle

Effect: Distorts proprioceptive feedback → creates illusory lengthening of the muscle

Unlike deafferentation, feedback is altered (not removed)

Used to study proprioceptive contribution to movement control & coordination

Research example: Verschueren et al. (1999), cited in Magill & Anderson (2017)

- Vibrating biceps/anterior deltoid altered arm trajectory in circle drawing

- Showed disrupted spatial accuracy and inter-limb coordination

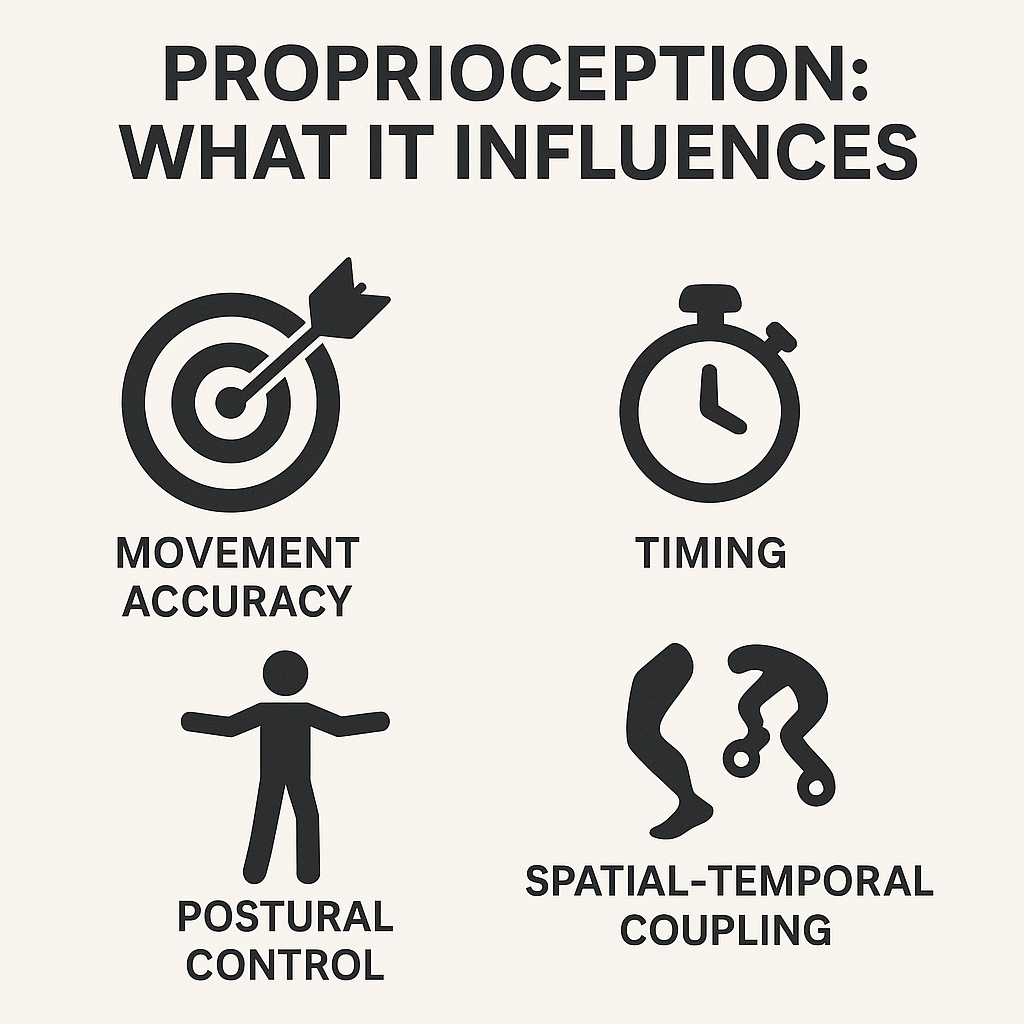

0.12 ⚙️ Proprioception: What It Influences

- Movement accuracy

    - Critical for spatial & temporal precision

    - Errors increase without proprioceptive feedback

- Timing

    - Influences onset of motor commands

    - Coordinates sequencing of limb actions

- Coordination

    - Supports segmental coupling within and across limbs

    - Ensures smooth multi-joint movement patterns

Major takeaways: Objectives 1-4

- Touch and proprioception both provide critical feedback for motor control

- Touch: important for object manipulation and fine motor skills

- Proprioception: essential for movement accuracy, timing, coordination, postural control

- Loss or distortion of either impairs motor performance

- CNS integrates tactile and proprioceptive info for smooth, coordinated actions

- Both are vital for skilled movement execution

- Understanding their roles aids in rehabilitation and skill training

- Next: Vision & Motor Control (objectives 5–8)

Objectives 5-8

- Summarize key eye anatomy and visual pathways for motor control

- Explain methods to study vision in action (eye tracking, occlusion)

- Discuss binocular vs. monocular, central vs. peripheral vision

- Describe perception–action coupling, online visual corrections, and tau

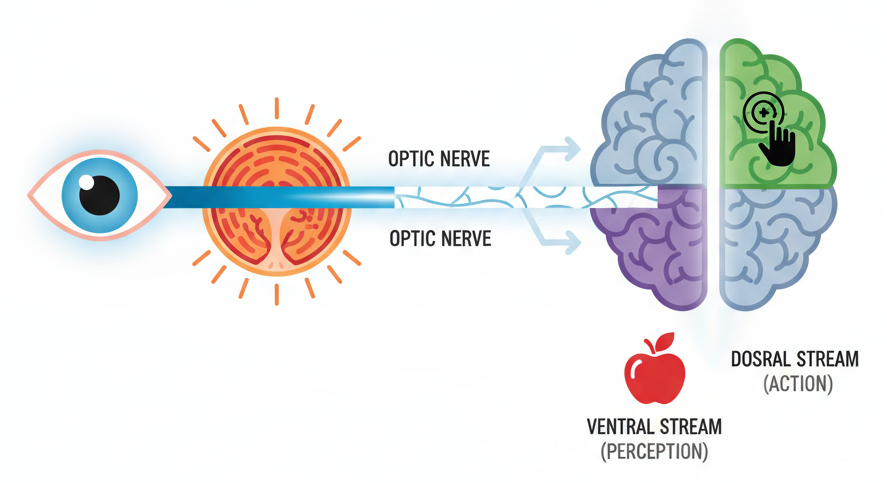

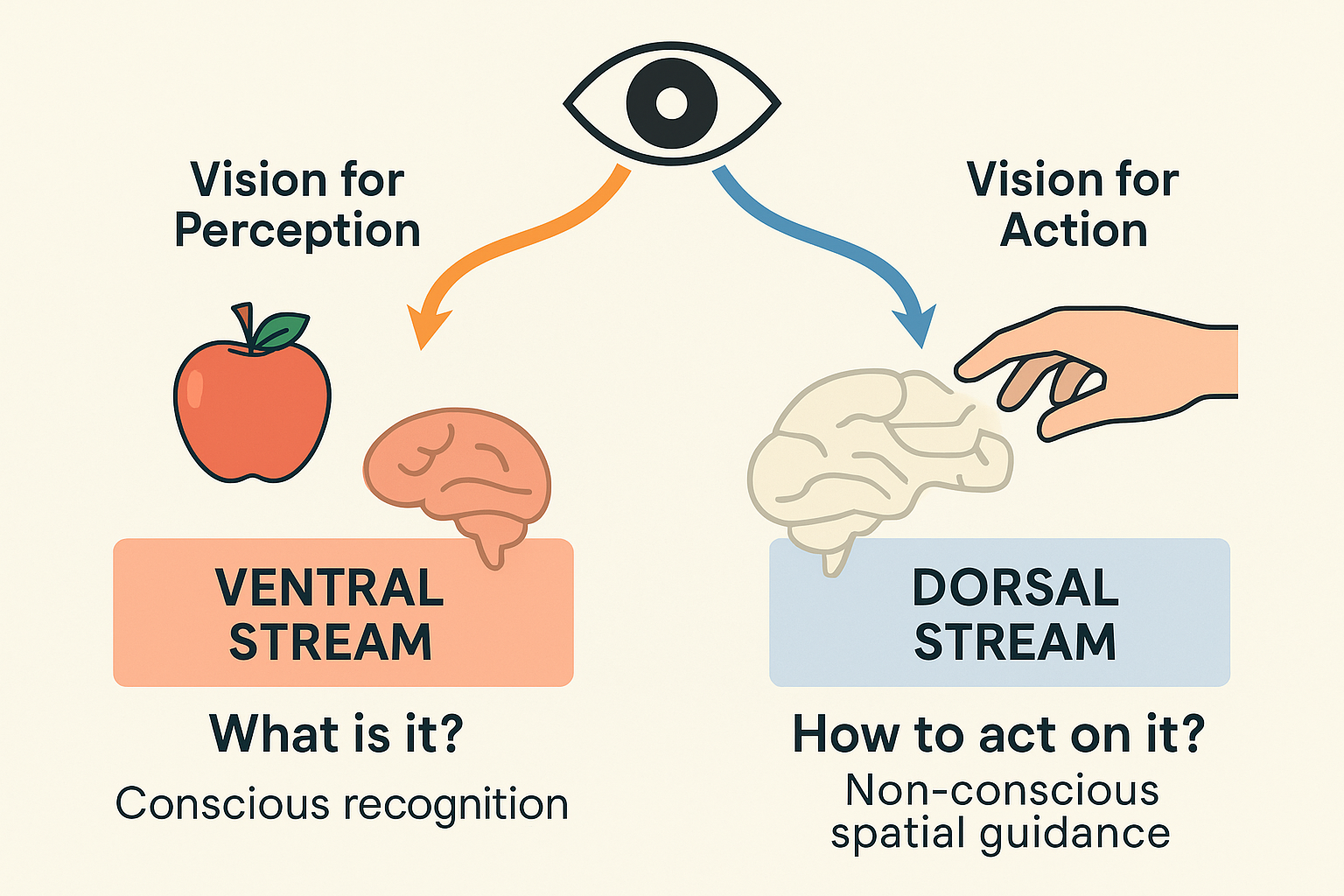

0.13 👁️ Vision: Neurophysiology (Overview)

- Eye → Retina → Optic nerve → Subcortical & Cortical pathways

- Retina: photoreceptors (rods & cones) transduce light → neural signals

- Parallel processing streams:

    - Ventral (“vision-for-perception”) → object identification (form, color, detail)

    - Dorsal (“vision-for-action”) → spatial guidance & movement control

- Integration with motor areas enables targeting, tracking, and corrections

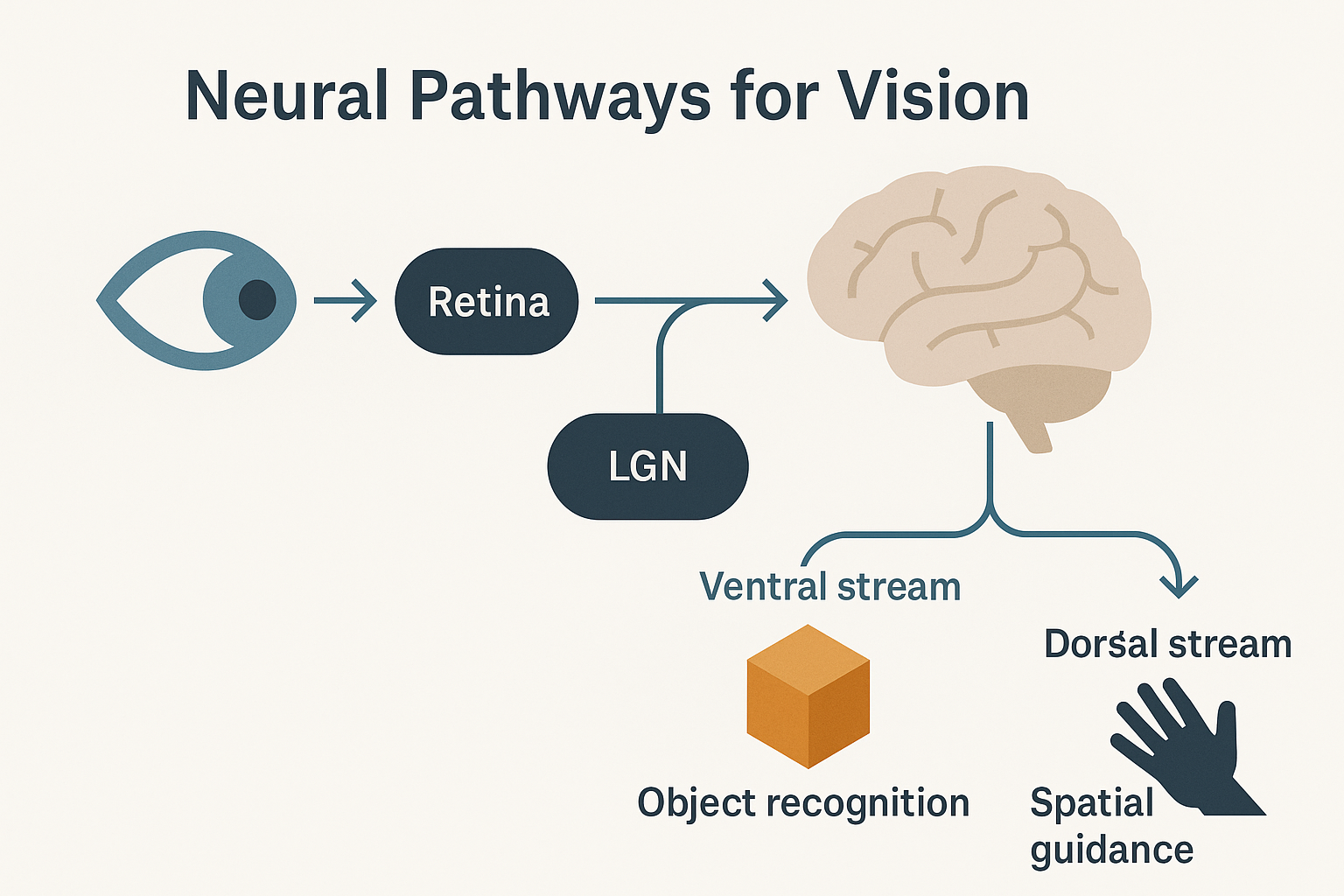

0.14 👁️ Neural Pathways for Vision (Overview)

- Light enters the eye; photoreceptors in the retina transduce light into neural signals

- Signals travel via the optic nerve; fibers from the nasal half of each retina cross at the optic chiasm

- Relayed to the lateral geniculate nucleus (LGN) of the thalamus

- Projected to the primary visual cortex (V1) in the occipital lobe

- From the cortex, information diverges into two parallel streams:

    - Ventral stream (vision-for-perception): object recognition and identification

    - Dorsal stream (vision-for-action): spatial guidance and movement control

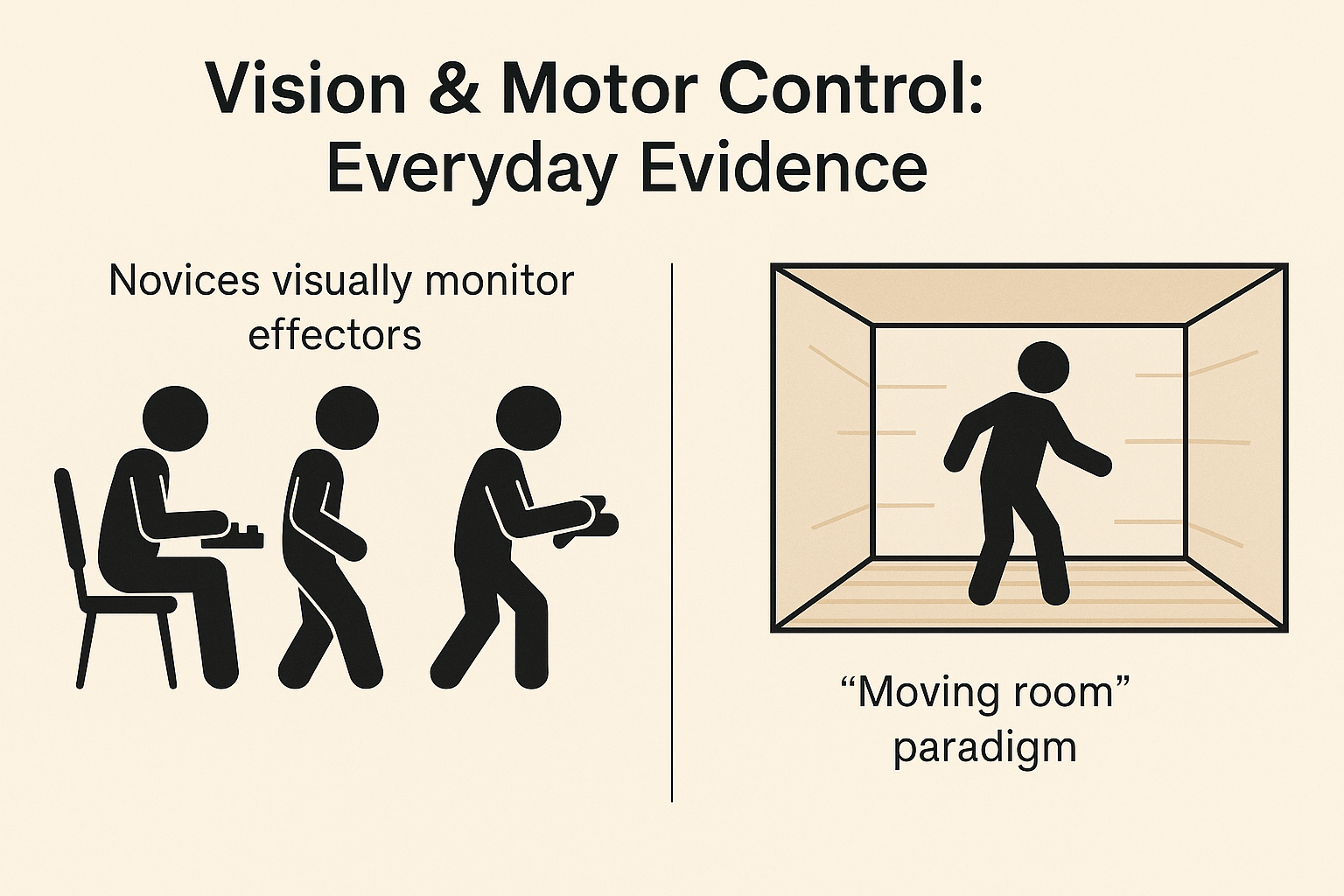

0.15 👀 Vision & Motor Control: Everyday Evidence

- Novices rely on vision to monitor effectors

    - Typists looking at their fingers

    - Dancers watching their feet

    - New drivers visually scanning every control

- With skill development, reliance on vision decreases as performers make greater use of tactile and proprioceptive feedback

- Classic “moving room” paradigm (Lee & Aronson, 1974)

    - Visual cues can override proprioceptive and vestibular information

    - Demonstrates visual dominance in postural control

- Everyday life: vision provides continuous reference for balance, posture, and spatial orientation

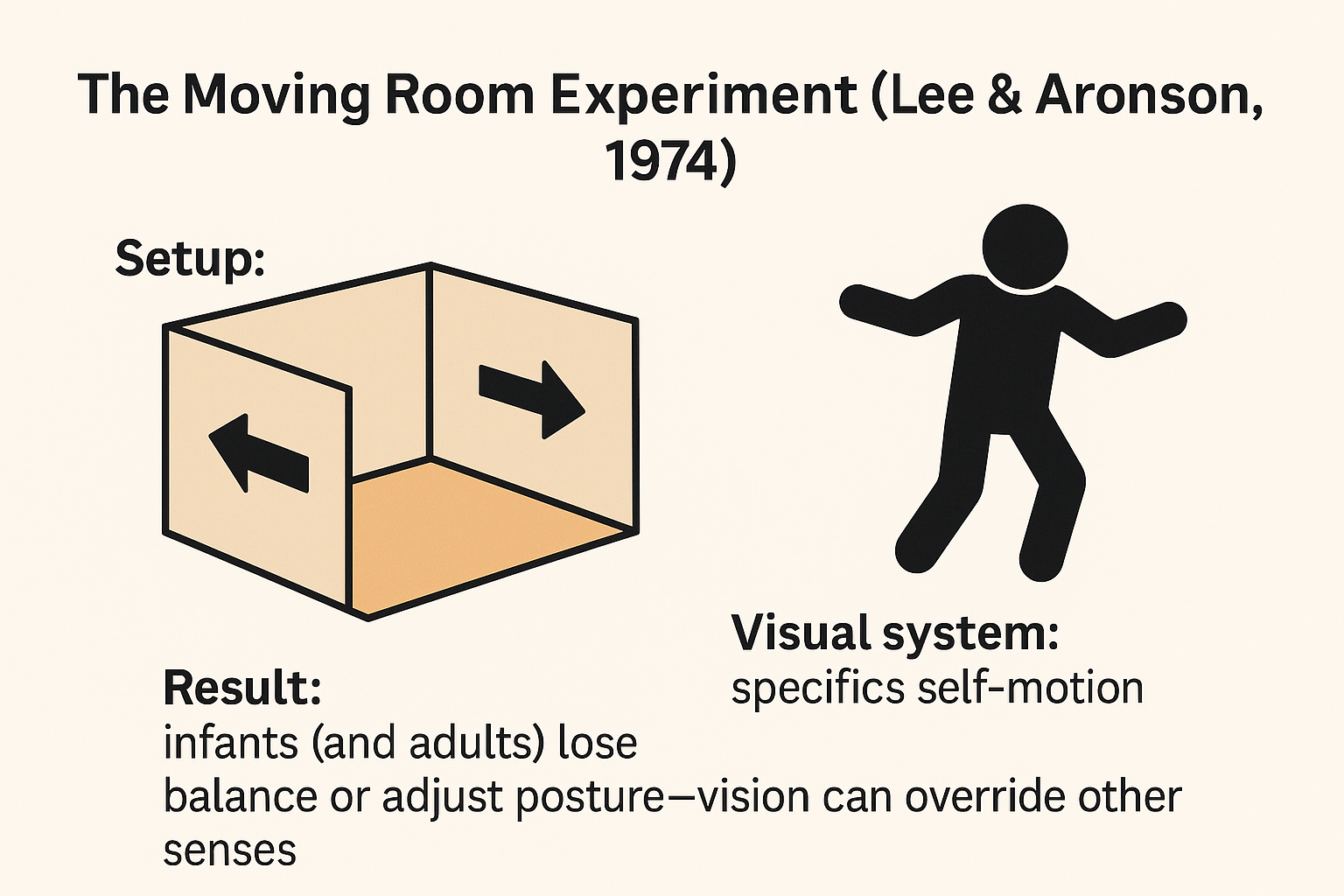

0.16 🏠 The Moving Room Experiment (Lee & Aronson, 1974, cited in Magill & Anderson, 2017)

- Setup:

    - Walls and ceiling of the room move back and forth

    - Floor remains completely stationary

- Conflict of sensory information:

    - Visual system: signals self-motion

    - Vestibular & somatosensory systems: signal no movement

- Findings:

    - Infants and adults sway, stumble, or lose balance

    - Demonstrates visual dominance: vision can override other senses in postural control

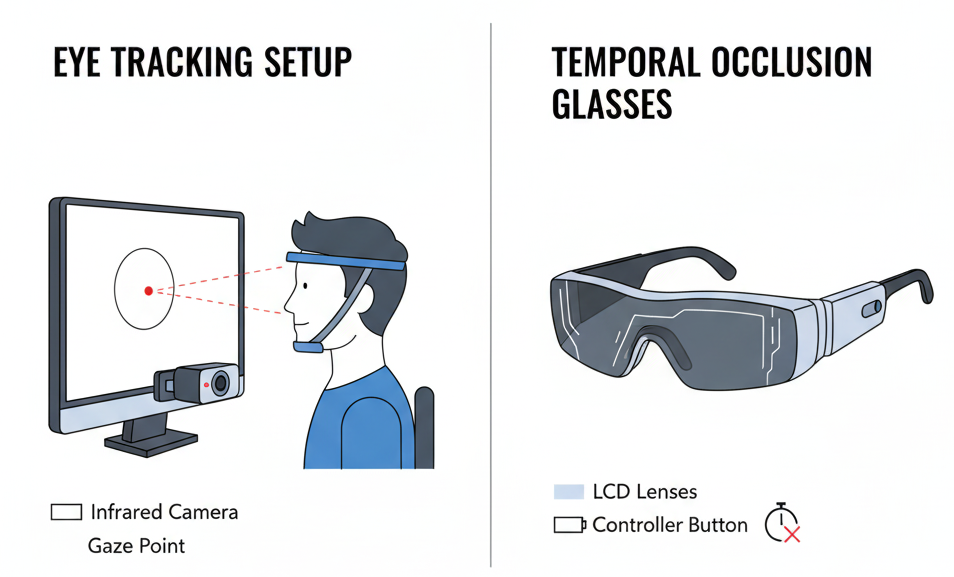

0.17 🎥 Studying Vision in Action (1): Methods

- Eye-movement recording

    - Tracks where the eyes are looking and for how long

    - Identifies point of gaze (foveal vision) during skill performance

- Temporal occlusion techniques

    - Stop video at different time points to test when critical information is detected

    - Use of liquid-crystal spectacles (PLATO glasses) to control viewing windows in real time

- Provide insights into how vision is used for anticipation, decision-making, and movement control

0.18 🎥 Studying Vision in Action (2): Methods

- Event occlusion techniques

    - Mask selected features of the performer (e.g., arm, racquet) or the environment in video or film sequences

    - Prevents the observer from seeing certain critical cues

- Purpose

    - Identifies which specific visual information performers rely on

    - Determines when this information is used during performance

- Provides insight into visual strategies for anticipation, decision-making, and motor skill execution

Refer to figure 6.8 in the textbook Magill & Anderson (2017) for an example of event occlusion in a tennis serve.

0.19 👁️ Role of Vision in Motor Control: Key Aspects

- Monocular vs. Binocular vision

    - Binocular vision improves depth perception and movement accuracy

    - Monocular vision reduces efficiency, especially at greater distances

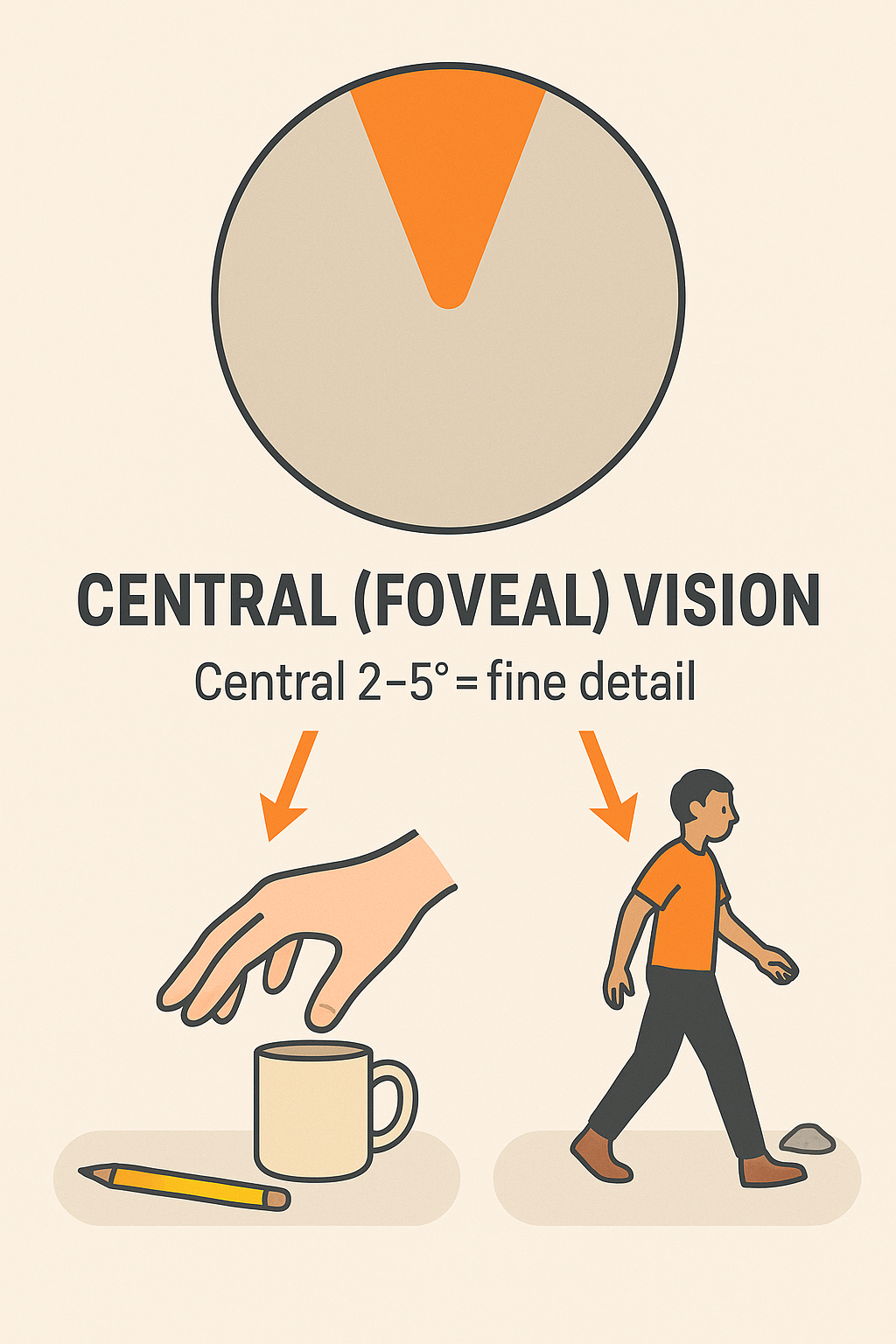

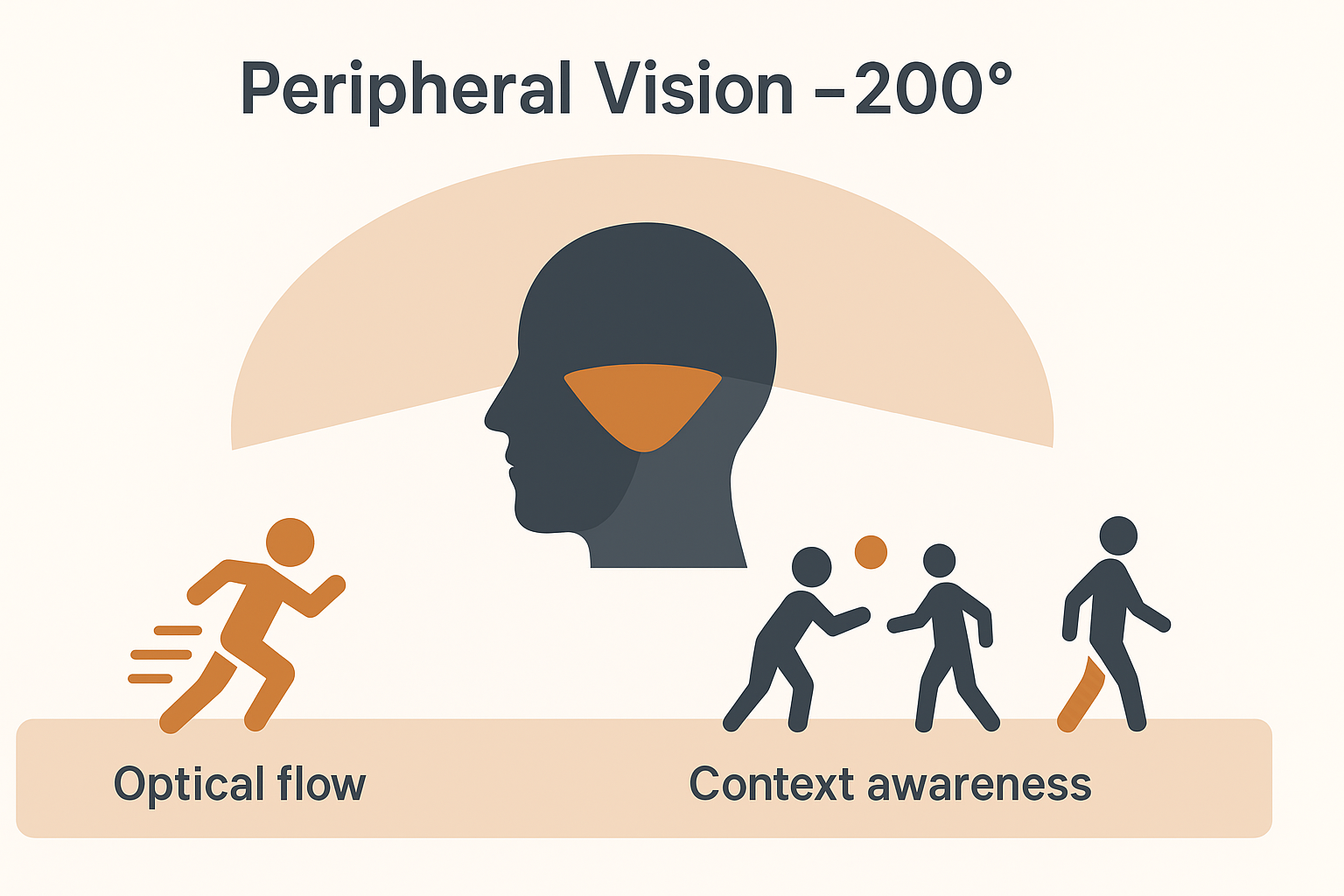

- Central vs. Peripheral vision

    - Central (foveal) vision detects fine detail and object features

    - Peripheral vision provides spatial context, guiding locomotion and posture

- Two visual systems

    - Ventral stream (vision-for-perception): recognition, description

    - Dorsal stream (vision-for-action): spatial guidance, movement control

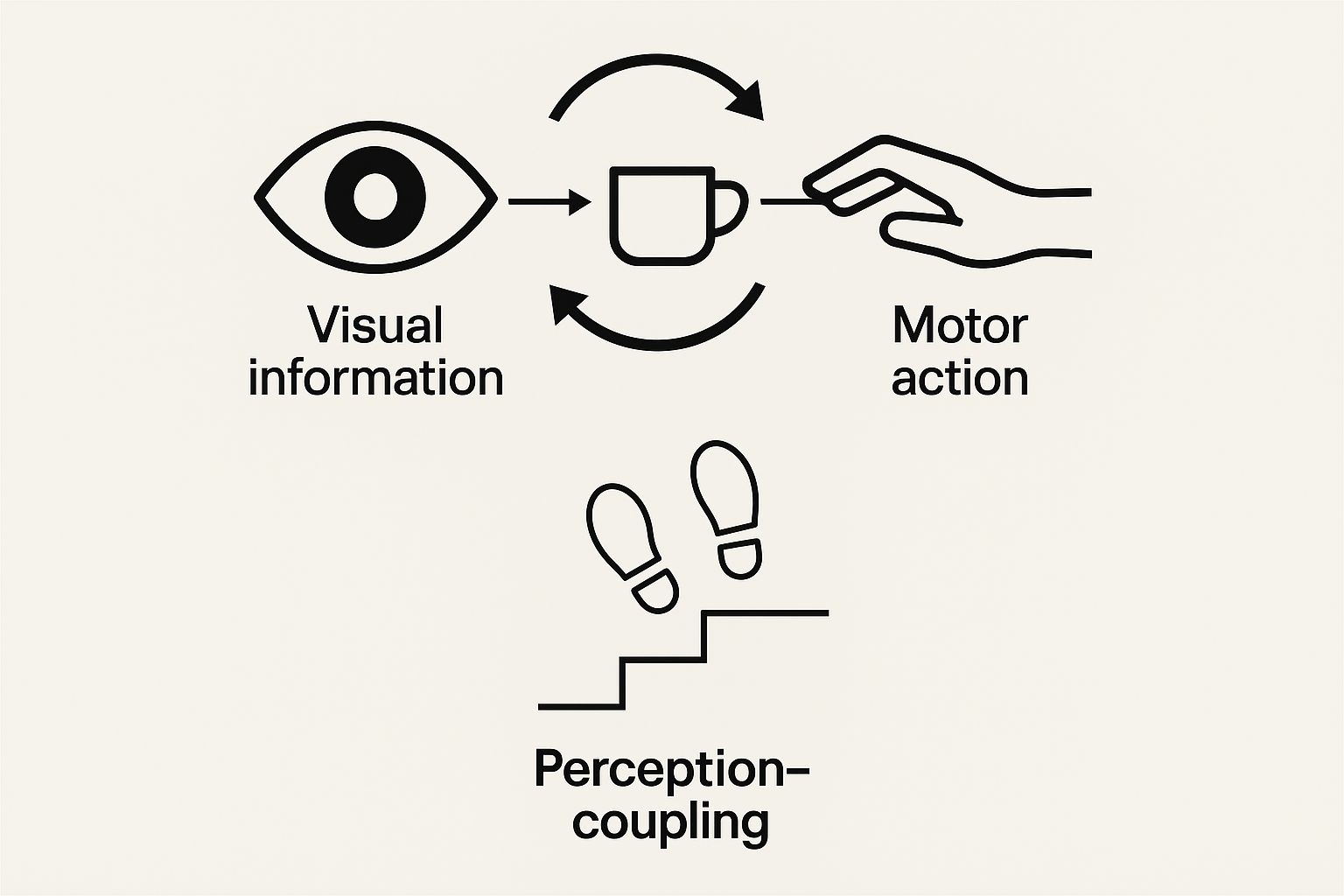

- Perception–action coupling

    - Visual information is tightly linked with motor execution

- Time course of corrections

    - Vision-based adjustments typically require ~100–160 ms

- Time-to-contact (tau)

    - Optical variable tau specifies when to initiate or adjust action

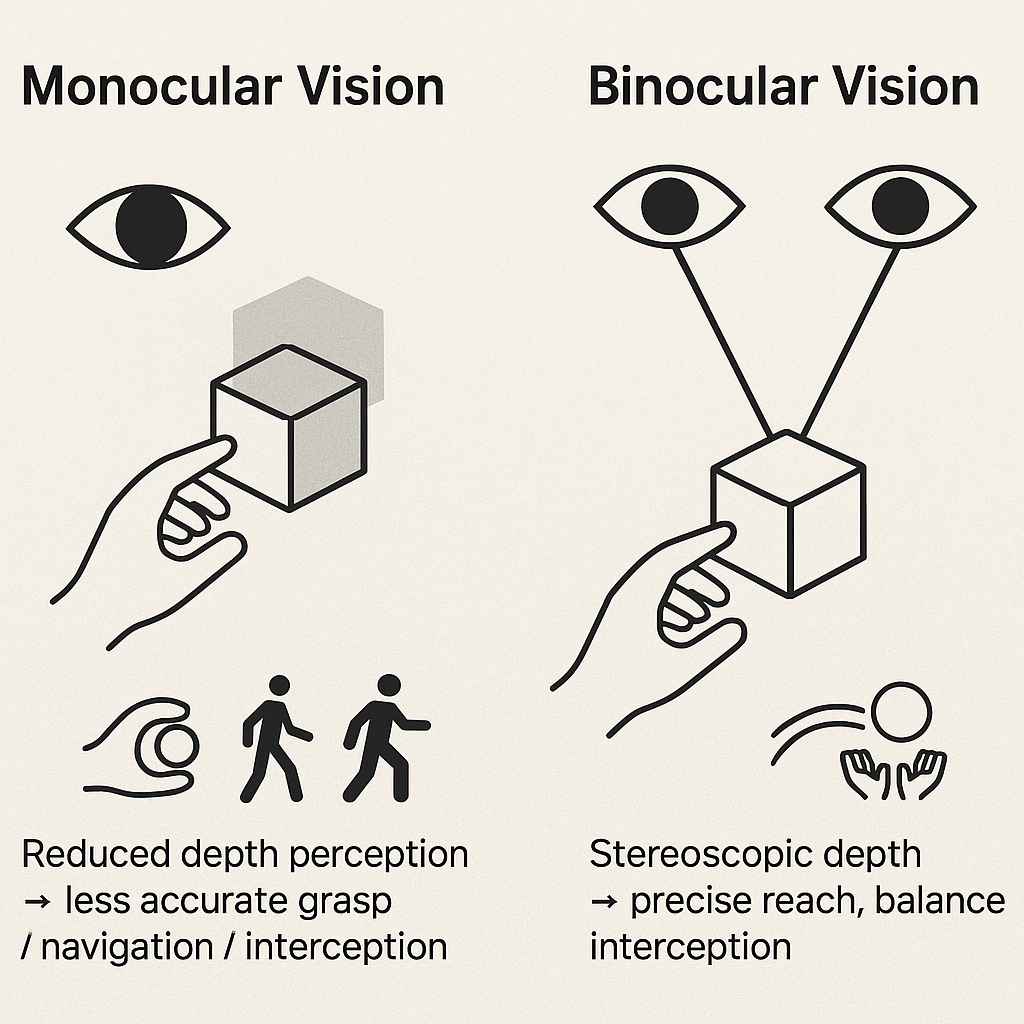

0.20 👀 Monocular vs. Binocular Vision

- Binocular vision

    - Provides depth perception and 3D spatial accuracy

    - Critical for:

        - Reaching and grasping objects

        - Walking through cluttered or uneven environments

        - Intercepting moving objects (e.g., catching, hitting)

- Monocular vision

    - Can support performance but with reduced accuracy and efficiency

    - Leads to underestimation of distance and object size

    - Errors more pronounced as distance to target increases

0.21 🎯 Central (Foveal) Vision

- Covers the central ~2–5° of the visual field (foveal vision)

- Responsible for detecting fine detail and specific features

- Provides critical information to support action goals:

    - Reaching & grasping

        - Detects object regulatory conditions (size, shape, orientation)

        - Guides grip formation and movement trajectory

    - Locomotion

        - Supplies precise path and obstacle details

        - Supports accurate foot placement and navigation

0.22 👁️ Peripheral Vision

- Extends across ~200° of the visual field

- Provides contextual information beyond the central 2–5°

- Contributes to perception of own limb movement during actions

- Essential for optical flow:

    - Pattern of motion across the retina created by self-movement

    - Guides posture, locomotion, and orientation in space

- Supports navigation through the environment and coordination with moving objects or people

0.23 👓 Two Visual Systems

- Vision-for-Perception (Ventral stream)

    - Pathway: visual cortex → temporal lobe

    - Fine analysis of visual scene: form, color, features

    - Supports object recognition and description

    - Information is typically conscious

- Vision-for-Action (Dorsal stream)

    - Pathway: visual cortex → posterior parietal lobe

    - Provides spatial characteristics of objects and environment

    - Guides movement planning and online control

    - Processing often occurs non-consciously

- Streams operate in parallel → perception and action are supported simultaneously

0.24 🔗 Perception–Action Coupling

- Perceptual information and motor actions are tightly connected

- Visual perception continuously informs movement parameters

- Eye–hand coordination as a classic example:

    - Spatial and temporal features of gaze align with limb kinematics

    - Point of gaze typically arrives at the target before the hand

- Coupling ensures movements are adjusted online to match environmental demands

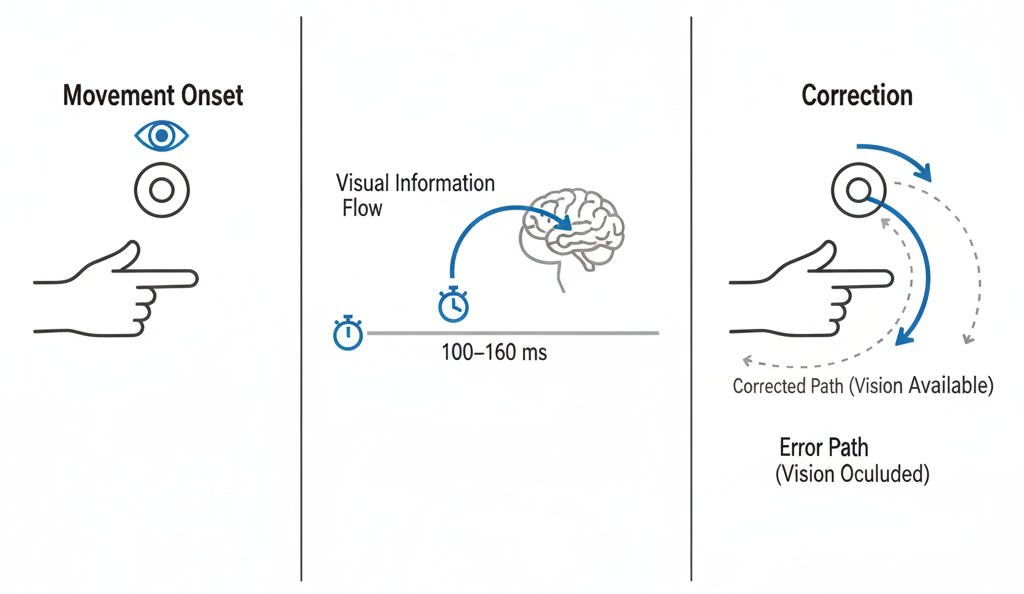

0.25 ⏱️ Online Visual Corrections: Time Required

- Experimental approach

    - Compare rapid aiming when target is visible vs. occluded after movement onset

    - If vision is available, corrections can be made mid-flight

    - If vision is removed, errors increase

- Time window for corrections

    - Visual feedback requires ~100–160 ms to process

    - Corrections possible only if movement duration allows this window

- Implications

    - Fast, ballistic movements often too brief for corrections

    - Slower or sustained movements benefit from visual feedback adjustments

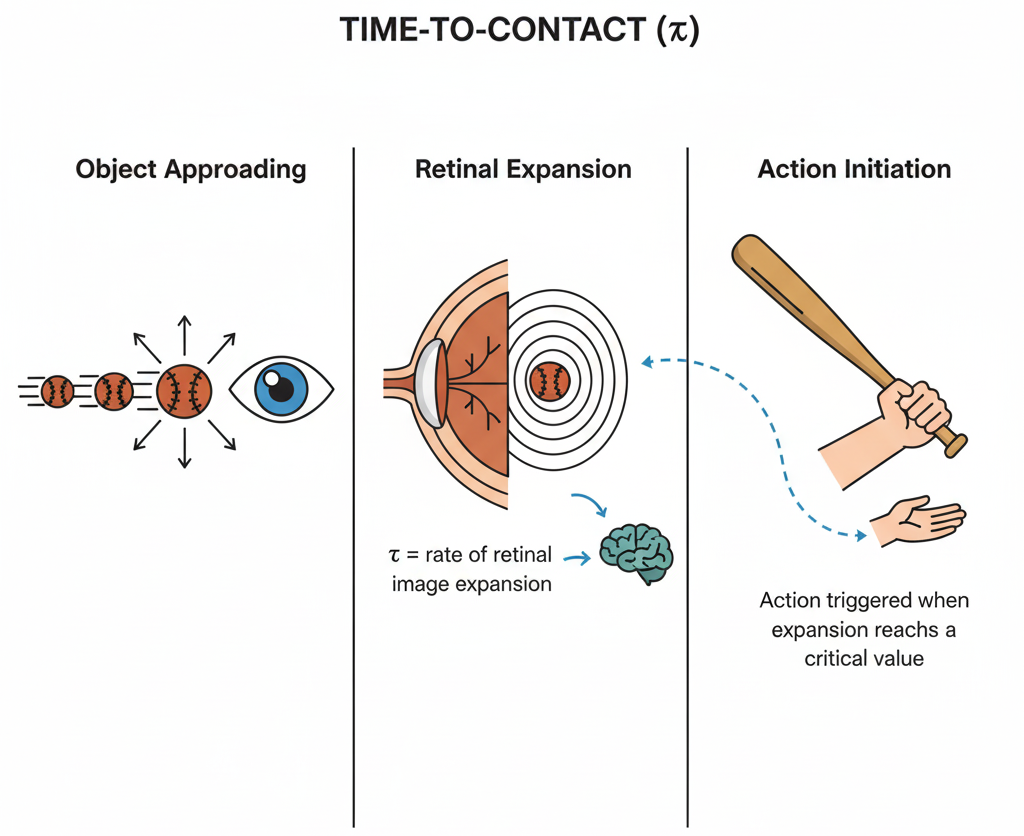
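
The time-window argument on this slide comes down to comparing how long a movement lasts with the visual processing delay. The Python sketch below is illustrative only. It assumes a fixed delay taken from either end of the ~100–160 ms range cited above. It also ignores the extra time needed to carry out the correction itself. The function names and example durations are hypothetical.

```python
# Illustrative sketch: can a movement of a given duration use visual feedback?
# Assumption: a fixed processing delay taken from the ~100-160 ms range cited above;
# the time needed to execute the correction itself is ignored.

VISUAL_DELAY_MIN_MS = 100.0  # lower end of the cited range
VISUAL_DELAY_MAX_MS = 160.0  # upper end of the cited range


def correction_window_ms(movement_ms: float, delay_ms: float = VISUAL_DELAY_MAX_MS) -> float:
    """Time left after the processing delay in which a visual correction could act (0 if none)."""
    return max(0.0, float(movement_ms - delay_ms))


def can_use_visual_feedback(movement_ms: float, delay_ms: float = VISUAL_DELAY_MAX_MS) -> bool:
    """True if the movement outlasts the assumed visual processing delay."""
    return movement_ms > delay_ms


if __name__ == "__main__":
    # Hypothetical movement durations (ms): very fast, fast, moderate, slow
    for duration in (80, 120, 200, 400):
        print(
            f"{duration:>4} ms | usable at 100 ms delay: "
            f"{can_use_visual_feedback(duration, VISUAL_DELAY_MIN_MS)} | "
            f"usable at 160 ms delay: {can_use_visual_feedback(duration)} | "
            f"window: {correction_window_ms(duration):.0f} ms"
        )
```

Under these assumptions, a hypothetical 120 ms movement falls in an uncertain zone: feedback is usable only if the delay is near 100 ms. A 400 ms reach, by contrast, leaves about 240 ms for corrections even at the upper delay estimate.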

0.26 ⏳ Time-to-Contact (τ)

- In interception and avoidance tasks, vision specifies when to initiate action

- Optical variable tau (τ):

    - Derived from the ratio of the object’s current retinal image size to its rate of expansion

    - Provides a direct estimate of time remaining until contact

- When τ reaches a critical value, action is triggered automatically (non-consciously)

- Allows precise movement initiation in dynamic contexts:

    - Catching or hitting moving objects

    - Avoiding oncoming obstacles

    - Timing steps or braking when approaching surfaces or vehicles
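
The idea that τ can be read directly from the expanding retinal image can be checked numerically. The Python sketch below uses the standard definition of τ: the visual angle θ divided by its rate of change, τ = θ / (dθ/dt). It compares this value with the true time to contact (distance ÷ speed). The sketch assumes a rigid ball approaching the eye head-on at constant speed. The ball size, speed, and distances are hypothetical, and the expansion rate is approximated with a finite difference.

```python
import math

# Illustrative sketch: tau as image size divided by its rate of expansion.
# Assumptions: rigid object, head-on approach, constant approach speed.


def visual_angle(size_m: float, distance_m: float) -> float:
    """Visual angle (radians) subtended by an object of a given size at a given distance."""
    return 2.0 * math.atan(size_m / (2.0 * distance_m))


def tau_estimate(size_m: float, distance_m: float, speed_mps: float, dt: float = 1e-3) -> float:
    """Estimate tau = theta / (d theta / dt) using a central finite difference over dt seconds."""
    theta = visual_angle(size_m, distance_m)
    # Image half a step earlier (object slightly farther) and half a step later (slightly closer)
    theta_earlier = visual_angle(size_m, distance_m + speed_mps * dt / 2.0)
    theta_later = visual_angle(size_m, distance_m - speed_mps * dt / 2.0)
    expansion_rate = (theta_later - theta_earlier) / dt  # radians per second
    return theta / expansion_rate


if __name__ == "__main__":
    ball_size = 0.07  # hypothetical ball diameter (m)
    speed = 20.0      # hypothetical approach speed (m/s)
    for distance in (20.0, 10.0, 4.0):
        tau = tau_estimate(ball_size, distance, speed)
        actual = distance / speed
        print(f"distance {distance:4.1f} m: tau = {tau:.3f} s, actual time to contact = {actual:.3f} s")
```

For small visual angles and a constant approach speed, τ closely tracks the actual time to contact. It does so using only optical information (image size and its rate of change), without knowing the object's distance or speed. If the object accelerates or decelerates, τ is only an approximation.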

✅ Major Takeaways (Ch. 06)

Touch & Proprioception (Obj. 1–4)

- ✋ Tactile feedback supports accuracy, timing, consistency, and force regulation; anesthetizing fingertips degrades performance

- 🧩 Proprioceptors (muscle spindles, GTOs, joint receptors) signal length/velocity, tension, and joint position → essential for posture, coordination, and movement correction

- 🧪 Classic methods: deafferentation (loss of afferents) and tendon vibration (illusory lengthening) reveal proprioception’s role

- 🏥 Training & rehab: task-specific practice that enriches cutaneous + proprioceptive feedback improves skill and recovery

Vision & Motor Control (Obj. 5–8)

- 🧠 Pathways: Retina → LGN → V1 → ventral (perception) and dorsal (action) streams running in parallel

- 🔭 Monocular vs. binocular: binocular depth perception improves 3D tasks (reach–grasp, navigation, interception)

- 🎯 Central vs. peripheral: central = fine detail/regulatory conditions; peripheral = context, limb motion, optical flow for posture/locomotion

- 🔗 Perception–action coupling: gaze timing aligns with limb kinematics; the eyes reach the target before the hand

- ⏱️ Online corrections: vision-driven adjustments require ~100–160 ms, so fast movements rely more on feedforward control

- ⌛ Time-to-contact (τ): retinal image expansion rate specifies when to initiate action, often non-consciously

So what?

- The CNS integrates touch, proprioception, and vision to plan, guide, and evaluate movement

- Effective instruction/rehab leverages the right sensory cues for the task (detail vs. context; depth; feedback timing)

- Designing practice that matches sensory demands (visibility, textures, loads, speeds) accelerates learning and recovery

0.27 💡 Points to the Practitioner

🔍 Assess Sensory Deficits

- Movement problems may stem from touch, proprioception, or vision deficits

- Examples:

    - Poor balance post-stroke may indicate proprioceptive loss, not just weakness

    - Gait instability could reflect somatosensory rather than motor deficits

👁️ Use Vision as a Compensatory Strategy

- Clients rely on vision to substitute for compromised sensory systems

- PT applications:

    - Mirror feedback for posture training

    - Visual targets for reaching exercises

    - Gait training with floor markers/visual cues

🎯 Optimize Visual Attention

- Direct central vision appropriately for motor tasks

- Clinical examples:

    - “Look at the target” during functional reaching

    - Eye-hand coordination in ADL retraining

    - Visual tracking exercises for sports return

⏰ Consider Processing Time

- Corrections require sufficient time for sensory-motor integration

- Rehabilitation applications:

    - Slow movement speeds for neurological clients

    - Adequate reaction time in fall prevention training

    - Progressive speed increases in sports rehab

0.28 🏥 Additional Clinical Considerations

🩺 Special Populations

- Diabetic neuropathy → tactile feedback loss affecting balance/gait

- Joint replacement → altered proprioception requiring retraining

- Parkinson’s disease → reduced proprioceptive processing

- Multiple sclerosis → variable sensory deficits affecting motor control

🎯 Advanced Training Techniques

- Closed-eye balance training → forces reliance on proprioception

- Dual-task training → divided attention challenges

- Sensory integration exercises → combine multiple sensory inputs

- Perturbation training → unexpected challenges to reactive systems

🧠 Cognitive & Environmental Factors

- Age-related changes → slower processing, reduced acuity

- Medication effects → sedation, dizziness impacting integration

- Cognitive load → reduce complexity for motor learning

- Attention deficits → impact sensory-motor coupling

🌍 Environmental Modifications

- Lighting optimization → improve visual input quality

- Surface texture → enhance tactile/proprioceptive feedback

- Visual contrast → aid object detection and depth perception

- Noise reduction → minimize sensory distractions

References

Download | Ovande Furtado Jr., Ph.D. | CSUN | KIN Department | KIN479 Motor Control | Course Site